Introducing OpenAI Sora

OpenAI just released Sora, a text-to-video model, and the results are mind-boggling. Before we get into this, I want you to see some of the results.

Now, a lot of people on Twitter were going, "Hey, this is going to disrupt Hollywood. This is going to make making movies and films so much cheaper."

But I think there was something that was missed by almost everybody, and I want to touch on it. Here is an official statement from their paper: "Sora serves as a foundation for models that can understand and simulate the real world, a capability we believe will be an important milestone in achieving AGI."

See, what we learned from text and from doing LLMs is that if you are able to put enough data in there and put enough compute in there, you are able to predict the next word.
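To make "predicting the next word" concrete, here is a minimal Python sketch of the idea at toy scale. It is only an illustration under loud assumptions: it counts which word follows which in a tiny made-up corpus, whereas real LLMs use neural networks trained on vastly more data and compute. The names `train_bigram`, `predict_next`, and the sample corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; a real model would see billions of words.
corpus = "the ball falls down . the ball bounces up . the ball falls fast"
model = train_bigram(corpus)

print(predict_next(model, "ball"))  # "falls" follows "ball" twice, "bounces" once
print(predict_next(model, "sora"))  # never seen in the corpus, so None
```

Even this crude counter has picked up a sliver of "how the world works" from its data (balls mostly fall), which is the intuition the rest of this piece builds on.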

Text-to-Video Model’s Understanding of Physics

According to one of OpenAI’s co-founders, Ilya Sutskever, anything that can predict the next word has a model of the world and some understanding of it. If you now have something that can predict the next frame of a video, then it has an understanding of the world too.

And not just a simple understanding, but an understanding of things like physics and how light rays bounce: all of the things that are actually computed in game engines. Not surprisingly, it looks like a lot of the training data that went into Sora was actually game engine footage and 3D environments. So we see a text-to-video model that can generate lots of stock footage, and there are lots of benefits to that, which we’ll talk about.

But what I see is that we are one step closer to artificial general intelligence, which is this thing that is able to model and understand the real world. Now, "understand" might be a loosely used word here, because it might not be humanlike understanding, but there is definitely something in there.

Requiring Massive Compute for High-Quality Video

And guess what: they just scaled transformers to make this happen. It is the same underlying technology behind ChatGPT applied to video, obviously with a slightly different set of techniques, but with lots of computational power behind it.

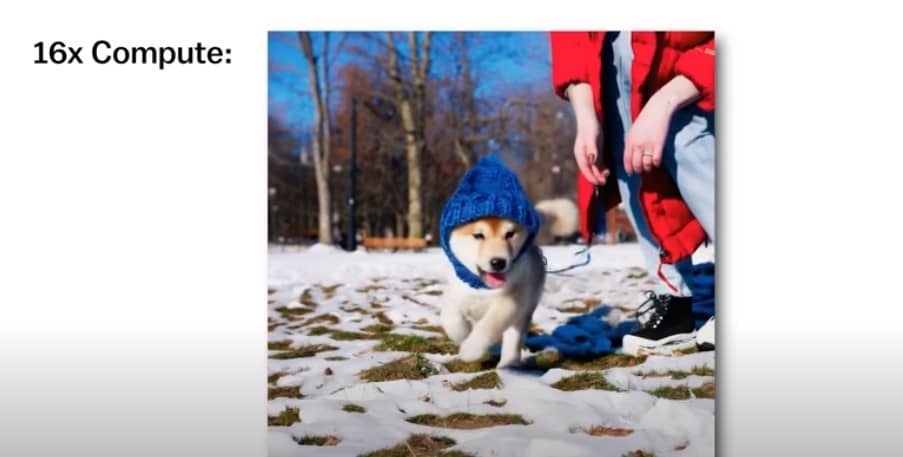

About three months ago I was experimenting with some text-to-video models. I thought there might be an interesting commercial opportunity in making my own text-to-video models. You can check out one of the slightly sad results here.

It turned out that with Sora, some of the earlier results with lower amounts of compute were producing garbage similar to this, but as more compute was added, it got better and better and smarter and smarter.

So Sam Altman’s idea of going out and raising so many trillion dollars might not be impossible after all.

General World Models

It’s not the first time that somebody has said that training a video model to understand video actually gives you an understanding of the world. The company Runway ML, which also runs a set of text-to-video tools, has this to say:

"We believe that the next major advancement in AI will come from systems that understand the visual world and its dynamics, which is why we’re starting a new long-term research effort around what we call General World Models. A world model is an AI system that builds an internal representation of an environment and uses it to simulate future events within that environment. Research in world models has so far been focused on very limited and controlled settings, either in toy simulated worlds or narrow contexts such as developing world models for driving."

Now, those are all great long-term things to ponder, but let’s talk about the immediate: how does this text-to-video model thing actually work, and how does it help you? You put in a prompt and it generates something for you.
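As a toy illustration of the world-model definition quoted above (an internal representation used to simulate future events), here is a minimal Python sketch. It says nothing about how Sora or Runway’s systems actually work. It assumes a one-dimensional object moving with constant acceleration, and the names `fit_world_model` and `simulate` are hypothetical.

```python
def fit_world_model(positions):
    """Build an internal representation (velocity, acceleration)
    from the last three observed frames, using finite differences."""
    p0, p1, p2 = positions[-3:]
    velocity = p2 - p1
    acceleration = (p2 - p1) - (p1 - p0)
    return velocity, acceleration


def simulate(last_position, velocity, acceleration, steps):
    """Roll the internal representation forward to predict future frames."""
    frames = []
    pos, vel = last_position, velocity
    for _ in range(steps):
        vel += acceleration  # assumption: acceleration stays constant
        pos += vel
        frames.append(pos)
    return frames


# Observed "frames": distance fallen at t = 0, 1, 2, 3 (made-up units, t squared).
observed = [0, 1, 4, 9]
velocity, acceleration = fit_world_model(observed)
print(simulate(observed[-1], velocity, acceleration, steps=2))  # [16, 25]
```

The model was never told the rule "distance equals time squared"; it recovered enough structure from the observed frames to predict the next ones. A video model would have to do something loosely analogous across millions of pixels.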

Issues With Sora

Now I’ll tell you the implications of this. A lot of content creators and editors, of course, are going to use this in the short term to make lots of high-quality B-roll. It still can’t make a full movie yet, and not just because there are visual issues.

I’ll show you a specific one: take a look at this old woman celebrating her birthday, then zoom in a little on the lady in the back and her hands. There are still lots of issues with things like that, so you probably can’t use it in serious production. You can’t use it for a Hollywood film. But that part will get better, and as we’ve seen, it’s getting better really fast. Maybe we’ll see extremely crazy stuff in six months.

What is more important, if you look at some of the prompts that were put in and the results, is that it’s not very accurate to the prompt. It generates something very believable, but it’s probably not exactly what you prompted.

For example, if you ask it, "Hey, I want a video of Batman beating up two goons and then eating a sandwich," it’ll generate something very believable and very nice, even if there are issues with the physics and some weird backward movement. That’s probably going to be fixed.

The problem is that you don’t have any control over this. Every time you generate it, the Batman will be different, his costume will be different, and the two goons will be different. All of those parts will be different, because it’s generating everything from scratch.

And although consistency in Stable Diffusion-type models has improved, there are still differences. If you generate an image of Batman and then use it as a reference to generate other consistent characters, there are still enough variations between the two Batmen that they don’t seem to come from the same image set.

With videos, this is even more evident. You can think of any video script in your head, maybe a 5-minute or 6-minute video, and the minute you start writing prompts for it, you have a problem: the text is not fully descriptive. There is some loss in that text, some things it doesn’t pick up from the scene, and therefore when that same prompt is sent to generate an image or a video, it generates something slightly different.

OpenAI Sora’s Current Benefit for B-Roll Footage

OpenAI Sora seems very useful for B-roll. If you’re making a video, you want some stock footage. In fact, somebody on the internet took a Sora video and made the lips move to a particular audio track, which is fantastic:

"The Matrix is a system, Neo. That system is our enemy. When you’re inside, you look around and you see businessmen, teachers, lawyers, carpenters: the very minds of the people we are trying to save."

Possibility of integrating yourself into high-quality Text-to-Video on media

In fact, there is a technology called IP-Adapter, which I use a lot with images, especially for our thumbnails. You will notice that earlier it was able to replicate only your facial features.

Now, with IP-Adapter Face Plus, it’s also able to replicate your jawline, your face structure, and your hair.

So you could make high-quality scenes of Superman or Shaktimaan or whatever it is, with you in it and you talking. A lot of people think that Hollywood will be dead or stuff like that. I don’t think that’s going to happen, because we kind of already have that technology.

Opportunities for Smaller/Independent Creators

If you’re not already working in Hollywood or some big production house, and you have a lower budget, a cheap camera, and a not-so-good computer, this is the best time for you.

That’s because a video editor, for example, would always be dependent on some creator or somebody else to generate footage for them. They would need somebody to shoot, and you need an expensive camera for that. That footage then comes to the editor, who makes a narrative out of it.

Final Thoughts

In six months to a year, somebody will make an open-source version of this. When that happens, you can use technology like IP-Adapter Face to make it look like you, with the same jawline and hair and nose and everything.

It’s actually you: it feels like you, it talks like you, and we’re able to mimic almost every part. By that time, the physics in that footage will have been sorted out.

So it’ll actually move like a real human, talk like a real human, and feel like a real human. If you really keep extrapolating that, then one day you might decide, "I want to make my own movie, where I’m the hero, and I go through these trials and tribulations, and this is what happens in the world, and here’s how I live my life, and I want to watch that movie."

And this is an Alan Watts quote, right: at what point do you decide that, if you can dream any dream, you dream the one that you are in today, where you are the main character and you’re going through everything? The sad truth is that you might just be the result of $30 of tokens in some computing center in some part of the world.

That’s it from me.